Propaganda Red Flag Checker

Analyze Online Content for Propaganda

Paste text from social media, news articles, or messages to identify common propaganda red flags. The tool uses pattern recognition similar to what ChatGPT does to highlight manipulation techniques.

Note: This tool identifies patterns associated with propaganda, but it does not replace independent fact-checking. Always verify claims with trusted sources.

Every day, millions of people scroll through social media, news sites, and messaging apps, seeing headlines that stir anger, fear, or excitement. Some of these stories are true. Many aren’t. And more than ever, they’re designed not just to inform but to manipulate. This is propaganda, modernized and amplified by algorithms. But a new tool is quietly helping people see through the noise: ChatGPT.

What Propaganda Looks Like Today

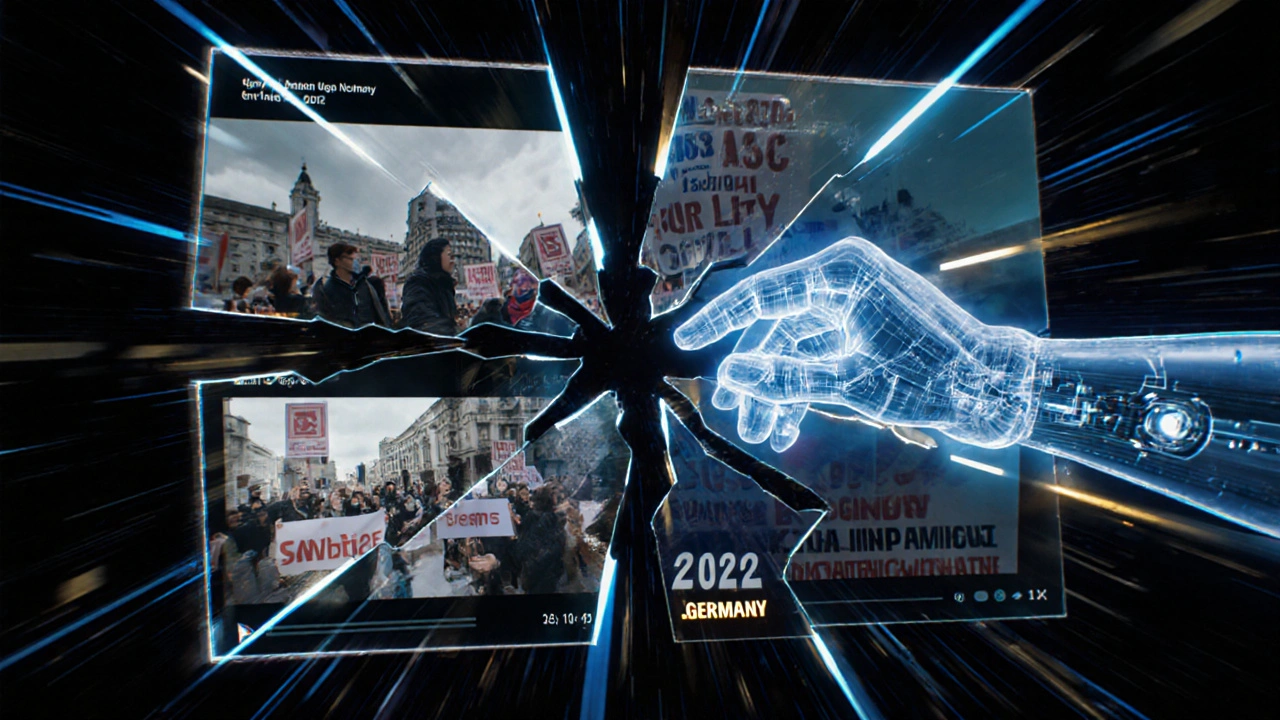

Propaganda isn’t just Soviet posters or wartime radio broadcasts. Today, it’s a viral TikTok clip edited to make a protest look violent when it wasn’t. It’s a Facebook post claiming a politician said something they never did, backed by a fake quote image. It’s a YouTube video using emotional music and shaky footage to push a political agenda. These aren’t mistakes; they’re engineered to trigger reactions, not understanding.

Unlike old-school propaganda that came from a single source, modern propaganda is decentralized. It spreads through bots, influencers, and ordinary users who don’t realize they’re sharing manipulated content. The goal? To polarize, confuse, or distract. And it works. A 2024 study by the University of Queensland found that 68% of Australians who encountered emotionally charged political content online couldn’t tell if it was real or fabricated.

Why Humans Struggle to Spot It

Our brains are wired to believe things that match what we already think. That’s called confirmation bias. When you see a post that says, “The government is hiding the truth about inflation,” and you’re already worried about rising prices, your first reaction isn’t to fact-check. It’s to nod and share.

Propaganda exploits this. It uses familiar language, trusted symbols, and emotional triggers. A well-crafted piece of propaganda doesn’t feel fake. It feels right. That’s why even smart, informed people get fooled. And when you’re scrolling on your phone during a coffee break, you don’t have time to dig into sources.

How ChatGPT Sees What Humans Miss

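ChatGPT doesn’t feel emotions, and it has no personal stake in whether a headline supports your side or your enemy’s. It isn’t entirely free of bias, since its training data carries the biases of its sources, but it doesn’t get swept up in outrage. It just analyzes patterns.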

Give it a piece of text (say, a tweet claiming “Scientists confirm 90% of climate data is faked”) and it breaks it down:

- It checks for vague or absolute claims (“90%,” “faked,” “scientists confirm”) that lack citations.

- It identifies emotional language designed to provoke outrage (“faked,” “hidden,” “they don’t want you to know”).

- It compares the claim with what it learned during training: no major scientific body has made that statement.

- It flags inconsistencies, such as a quote attributed to a scientist who died in 2018.
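The first two checks in that breakdown can be approximated, very crudely, with keyword rules. The Python sketch below is a hypothetical rule-based scanner, not how ChatGPT works internally: a language model weighs context, while this code only matches strings. The phrase lists (ABSOLUTE_TERMS, EMOTIONAL_PHRASES, CITATION_HINTS) and the function name find_red_flags are illustrative assumptions, not a vetted lexicon.

```python
import re
from dataclasses import dataclass

# Illustrative phrase lists: assumptions for this sketch, not a vetted lexicon.
ABSOLUTE_TERMS = ["all", "every", "never", "always", "confirm", "proven"]
EMOTIONAL_PHRASES = ["faked", "hidden", "they don't want you to know", "cover-up"]
CITATION_HINTS = ["according to", "http", "doi.org", "published in"]


@dataclass
class RedFlag:
    kind: str      # which heuristic fired
    evidence: str  # the text that triggered it


def find_red_flags(text: str) -> list[RedFlag]:
    """Return rough keyword-based red flags for a piece of text."""
    # Lowercase and normalise curly apostrophes so phrase matching is simpler.
    lowered = text.lower().replace("\u2019", "'")
    flags: list[RedFlag] = []

    # Sweeping or absolute wording, matched as whole words only.
    for term in ABSOLUTE_TERMS:
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            flags.append(RedFlag("absolute claim", term))

    # Emotionally loaded phrases, matched as plain substrings.
    for phrase in EMOTIONAL_PHRASES:
        if phrase in lowered:
            flags.append(RedFlag("emotional language", phrase))

    # Precise-looking percentages with nothing that resembles a source.
    has_citation = any(hint in lowered for hint in CITATION_HINTS)
    if not has_citation:
        for number in re.findall(r"\d+(?:\.\d+)?%", text):
            flags.append(RedFlag("uncited statistic", number))

    return flags


if __name__ == "__main__":
    tweet = "Scientists confirm 90% of climate data is faked"
    for flag in find_red_flags(tweet):
        print(f"{flag.kind}: {flag.evidence}")
    # absolute claim: confirm
    # emotional language: faked
    # uncited statistic: 90%
```

Keyword matching like this produces false positives (a news report quoting a conspiracy theory would trip the same rules), so treat any hit as a prompt to look closer, not a verdict.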

This isn’t magic. It’s pattern recognition. ChatGPT has been trained on millions of articles, reports, and fact-checks. It knows how real science writing sounds versus how propaganda mimics it. And it can do this in seconds.

One user in Brisbane tested this by pasting five viral posts from their Facebook feed into ChatGPT. Three were flagged as likely propaganda. One was a legitimate news article. The fifth? A satirical meme they didn’t realize was satire. ChatGPT called it out: “This uses exaggerated humor to mock a real policy, but without context, it could be mistaken for factual.”

Real-World Use Cases

People are already using ChatGPT to fight propaganda, not as a replacement for critical thinking but as a second pair of eyes.

Teachers in Queensland are using it to help students analyze news articles. Instead of just saying “this is fake,” they ask ChatGPT: “What are three signs this article might be misleading?” The answers give students a checklist to work from: a missing author, no sources, loaded words, emotional manipulation, and inconsistent dates.

Community groups in regional Australia are using it to verify claims made during local council meetings. Someone posts a video claiming “the new bike path will cost $2 million and benefit only 12 people.” Given the council’s public budget documents, ChatGPT compares the numbers and reports: “The actual budget line item is $450,000, and the project includes 14 suburbs.”

Even journalists are turning to it. A reporter in Melbourne used ChatGPT to help trace the origin of a viral video showing “armed protesters storming a hospital.” Given the video’s metadata, the AI noticed it matched a 2022 clip from a different country. It also spotted that the flag being waved was the wrong national flag. The story was debunked before it went mainstream.

What ChatGPT Can’t Do

It’s not a truth machine. Unless its web search feature is turned on, it has no access to live databases or real-time news. It can’t verify a video’s location unless you give it context. It doesn’t know if a source is trustworthy unless you tell it who they are.

It also makes mistakes. Sometimes it’s too cautious and labels real stories as propaganda. Other times, it misses cleverly disguised disinformation, especially when the text is well written and uses real facts twisted out of context.

That’s why it’s a tool, not a judge. Think of it like a spellchecker for lies: it catches the typos in logic, the telltale grammar of deception, and the inconsistencies in claims. But you still have to read the whole sentence.

How to Use ChatGPT to Check Propaganda

If you want to start using ChatGPT to spot manipulation, here’s how:

- Copy the text; don’t just describe it. Paste the exact words, including punctuation.

- Ask specifically: “Is this likely propaganda? List three red flags.”

- Check the sources: If ChatGPT mentions a study or organization, look them up yourself. It can hallucinate references.

- Compare versions: Paste the same claim from two different sources. See how the wording changes.

- Look for emotional triggers: Words like “they,” “them,” “secret,” “they don’t want you to know,” or “everyone knows” are classic propaganda signals.
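The “Compare versions” step can also be done mechanically once you have both texts. The minimal sketch below uses Python’s standard-library difflib module to list the words that differ between two wordings of a claim. The function name compare_wording and the two sample sentences (built from the bike-path figures earlier in this article) are hypothetical, chosen only for illustration.

```python
import difflib


def compare_wording(version_a: str, version_b: str) -> list[str]:
    """Describe the word-level differences between two versions of a claim."""
    words_a = version_a.split()
    words_b = version_b.split()
    # SequenceMatcher aligns the two word lists and reports edit operations.
    matcher = difflib.SequenceMatcher(a=words_a, b=words_b)
    changes: list[str] = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        removed = " ".join(words_a[i1:i2])
        added = " ".join(words_b[j1:j2])
        if tag == "insert":
            changes.append(f"added: {added}")
        elif tag == "delete":
            changes.append(f"removed: {removed}")
        elif tag == "replace":
            changes.append(f"changed: {removed} -> {added}")
        # "equal" spans are wording both versions share, so they are skipped.
    return changes


if __name__ == "__main__":
    original = "The council approved a $450,000 bike path"
    reposted = "The council secretly approved a $2 million bike path"
    for change in compare_wording(original, reposted):
        print(change)
    # added: secretly
    # changed: $450,000 -> $2 million
```

Comparing whole words rather than characters keeps the output readable: a single loaded word like “secretly” or an inflated figure stands out immediately.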

Don’t trust the first answer. Ask follow-ups: “Why is this claim misleading?” or “What’s the opposite of this?” The more you probe, the clearer the picture becomes.

Why This Matters Right Now

2025 is shaping up to be one of the most manipulated election years in history. In Australia, Canada, the U.S., and across Europe, foreign and domestic actors are testing new ways to sway public opinion. Deepfakes are getting cheaper. AI-generated text is harder to detect. And social platforms still prioritize engagement over accuracy.

But here’s the good news: tools like ChatGPT are leveling the playing field. For the first time, ordinary people have access to something that used to be only available to intelligence agencies or fact-checking nonprofits: rapid, scalable analysis of misinformation.

This isn’t about replacing journalists or experts. It’s about giving everyone the power to ask: “Does this make sense?” before they hit share.

The Bigger Picture

Propaganda thrives in silence. When people don’t question what they see, it spreads. When people start asking questions, especially with tools that help them think clearly, it loses power.

ChatGPT won’t fix the internet. But it’s helping people break the cycle of automatic sharing. It’s teaching users to pause. To look closer. To demand proof. And that’s the first step toward a less manipulated world.

It’s not about trusting AI. It’s about using AI to trust yourself more.

Can ChatGPT detect deepfake videos?

Not reliably. ChatGPT can’t play back video, and although newer versions accept image uploads, it isn’t a forensic tool for detecting manipulated footage. But if you describe what’s in a video, such as the claims being made or the context, it can help you spot inconsistencies. For example, if a video says a protest happened in Sydney on June 5, but the details don’t fit what’s known about that day, it can flag that as suspicious. To check videos, you need tools like InVID or Amnesty’s YouTube DataViewer.

Is ChatGPT biased in how it identifies propaganda?

ChatGPT doesn’t have political beliefs, but its training data includes content from many sources, including biased ones. That means it might sometimes treat left-leaning and right-leaning claims differently even when they’re phrased similarly. To reduce this, always ask for specific red flags instead of yes/no answers. For example, ask: “What are three linguistic signs this text might be manipulative?” This forces it to focus on structure, not ideology.

Can I use ChatGPT for free to check propaganda?

Yes. The free version of ChatGPT is capable enough to spot most common propaganda tactics. You don’t need the paid version unless you’re analyzing hundreds of pieces of text daily. For personal use, such as checking a viral post or a news headline, the free version works well.

What’s the difference between propaganda and fake news?

Fake news is false information presented as fact. Propaganda is information (true, false, or mixed) used to influence opinions or behavior. Not all propaganda is fake news, but fake news is often used as propaganda. For example, a true statistic about unemployment, presented without context to blame a specific group, becomes propaganda. The goal isn’t just to lie; it’s to move people.

Should I rely on ChatGPT to fact-check political claims before voting?

Use it as a starting point, not a final answer. ChatGPT can help you identify misleading claims, but you should always cross-check with trusted sources like ABC News Fact Check, Snopes, or official government data. The best practice: if ChatGPT flags a claim, search for “[claim] + fact check” in a search engine. Look for multiple independent sources that agree.

Next Steps: Build Your Own Propaganda Detector Habit

Start small. Pick one social media platform you use daily. Before you share anything that makes you feel a strong emotion (anger, fear, or triumph), pause. Copy the text. Paste it into ChatGPT. Ask: “Is this likely propaganda? What are three red flags?”

Do this for a week. You’ll start noticing patterns. You’ll see how often emotional language replaces evidence. You’ll learn to distrust vague claims. And you’ll stop sharing things just because they feel right.

That’s not just smart. It’s powerful. In a world full of noise, the most radical thing you can do is pause and ask for proof.
