Understanding Propaganda in the Digital Age

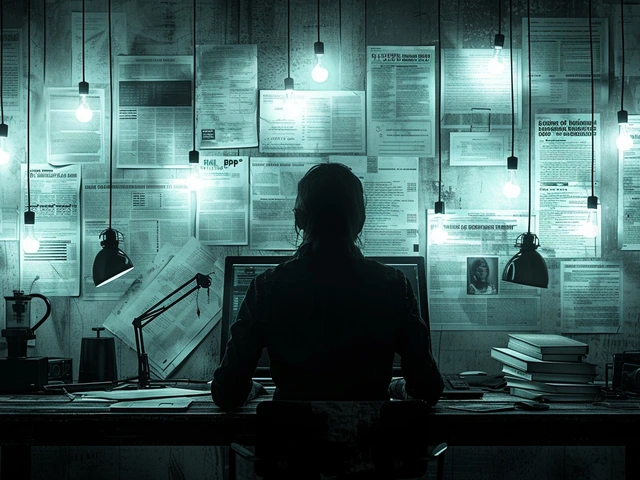

Our journey into the world of propaganda detection begins with a fundamental question: What exactly is propaganda in today's digital landscape? Traditionally, propaganda refers to the systematic effort to shape perceptions, manipulate opinions, and direct behavior to achieve a response that furthers the propagandist's intent. With the advent of the internet and social media platforms, the mechanisms and velocity of propaganda dissemination have changed dramatically. This digital proliferation has made it exceedingly difficult to trace where misleading information originates and what it is meant to achieve, whether a political agenda or a commercial interest, and it has created an urgent need for sophisticated detection tools.

In this context, artificial intelligence (AI), particularly large language models like ChatGPT, emerges as a promising ally. These systems are trained on vast datasets of diverse web text and learn to reproduce human language patterns with remarkable fluency. That sensitivity to language makes them potentially useful for spotting the subtle cues that can signal propaganda, offering a glimmer of hope in the ongoing battle against misinformation.

The Role of ChatGPT in Propaganda Detection

ChatGPT, developed by OpenAI, is at the forefront of this battle. It is a powerful language model that has demonstrated an uncanny ability to understand and generate human-like text based on its training. But how exactly does ChatGPT fit into the puzzle of propaganda detection? The answer lies in its architecture and training process. The underlying models can be fine-tuned, or simply prompted, to recognize the patterns and anomalies that often characterize propaganda, such as emotional manipulation, biased language, and logical fallacies.

For instance, by analyzing text for these specific markers, ChatGPT can flag potentially manipulative content for further review. This does not mean ChatGPT works in isolation. Humans still play a crucial role in verification, combining AI's computational power with human judgment and contextual understanding. This combined approach improves our ability to sift through vast amounts of information and surface content that warrants suspicion or deeper investigation.
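To make the flag-then-review workflow concrete, here is a minimal Python sketch. It deliberately does not call ChatGPT: the scoring step is a hypothetical toy keyword lexicon (`MARKER_LEXICON`) standing in for a language model's judgment, and the names `score_text` and `triage` and the default threshold of 2 are illustrative assumptions. What the sketch shows is the shape of the pipeline: the detector only routes items to a human review queue and never labels anything as propaganda on its own.

```python
from dataclasses import dataclass, field

# Hypothetical toy lexicon standing in for a language model's judgment.
# A real system would ask a model such as ChatGPT to assess these markers;
# absolutist words are only a crude proxy for fallacies like overgeneralization.
MARKER_LEXICON: dict[str, set[str]] = {
    "emotional_manipulation": {"outrage", "terrifying", "disgusting", "shocking"},
    "biased_language": {"traitor", "puppet", "regime", "thugs"},
    "logical_fallacy": {"everyone", "always", "never", "obviously"},
}


@dataclass
class Verdict:
    text: str
    hits: dict[str, list[str]] = field(default_factory=dict)

    @property
    def score(self) -> int:
        # Total number of marker words found across all categories.
        return sum(len(words) for words in self.hits.values())


def score_text(text: str) -> Verdict:
    """Count lexicon hits per marker category (a crude stand-in for a model)."""
    tokens = [t.strip(".,!?;:\"'").lower() for t in text.split()]
    hits: dict[str, list[str]] = {}
    for category, lexicon in MARKER_LEXICON.items():
        found = [t for t in tokens if t in lexicon]
        if found:
            hits[category] = found
    return Verdict(text, hits)


def triage(texts: list[str], threshold: int = 2) -> tuple[list[Verdict], list[Verdict]]:
    """Split texts into a human-review queue and a pass-through list.

    Nothing is labelled propaganda automatically: items whose score is at or
    above the threshold are only routed to a human reviewer.
    """
    review: list[Verdict] = []
    passed: list[Verdict] = []
    for text in texts:
        verdict = score_text(text)
        (review if verdict.score >= threshold else passed).append(verdict)
    return review, passed


if __name__ == "__main__":
    posts = [
        "The council published its budget report on Tuesday.",
        "Shocking! Everyone knows the regime puppets always lie.",
    ]
    review, passed = triage(posts)
    for v in review:
        print("REVIEW:", v.score, v.hits)
```

In a real deployment the lexicon lookup would be replaced by a model call, but keeping the human review queue as the detector's only output is what preserves the division of labor between AI and human judgment.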

Challenges and Limitations

However, the path to effective propaganda detection using AI is fraught with challenges. One of the primary concerns is error in both directions: false positives unfairly label genuine content as propaganda, while false negatives let actual disinformation slip through. These errors often stem from the nuances of language and context, areas where even the most sophisticated AI can stumble. Additionally, in the arms race between detection methods and propagandists' evolving tactics, systems like ChatGPT must be continuously updated to keep pace with new techniques and technologies for spreading disinformation.
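These two failure modes can be measured. The Python sketch below assumes a set of human-verified labels to compare a detector's flags against. It counts false positives and false negatives and derives precision (how much flagged content really is propaganda), recall (how much propaganda gets caught), and the false positive rate (how often genuine content is wrongly flagged). `evaluate` and `DetectionMetrics` are hypothetical names for illustration, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionMetrics:
    true_pos: int   # propaganda correctly flagged
    false_pos: int  # genuine content wrongly flagged as propaganda
    false_neg: int  # propaganda the detector missed
    true_neg: int   # genuine content correctly left alone

    @property
    def precision(self) -> float:
        flagged = self.true_pos + self.false_pos
        return self.true_pos / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        actual = self.true_pos + self.false_neg
        return self.true_pos / actual if actual else 0.0

    @property
    def false_positive_rate(self) -> float:
        genuine = self.false_pos + self.true_neg
        return self.false_pos / genuine if genuine else 0.0


def evaluate(predicted: list[bool], actual: list[bool]) -> DetectionMetrics:
    """Compare detector flags with human-verified labels (True = propaganda)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    return DetectionMetrics(
        true_pos=sum(p and a for p, a in pairs),
        false_pos=sum(p and not a for p, a in pairs),
        false_neg=sum(not p and a for p, a in pairs),
        true_neg=sum(not p and not a for p, a in pairs),
    )
```

With the purely illustrative labels `evaluate([True, True, False, False, True], [True, False, True, False, True])`, the detector catches two of three propaganda items (recall 2/3) and wrongly flags one of two genuine items (false positive rate 0.5). Tightening or loosening a detector's threshold typically trades one of these numbers against the other.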

Moreover, ethical concerns around privacy, censorship, and the potential misuse of AI for surveillance or counter-propaganda efforts cannot be ignored. As we integrate AI into our information ecosystems, striking a balance between effective detection and safeguarding individual rights becomes paramount. We must tread carefully, ensuring that our pursuit of truth does not inadvertently trample on the values we seek to protect.

Incorporating ChatGPT into Daily Information Consumption

So, how can individuals and organizations harness ChatGPT for propaganda detection in practical terms? The integration of AI into our daily information consumption begins with awareness and education. Understanding the capabilities and limitations of tools like ChatGPT is the first step towards leveraging them effectively. For individuals, this might involve using AI-assisted browser extensions or apps that analyze news articles, social media posts, and other content for potential signs of propaganda.

Organizations, on the other hand, can incorporate ChatGPT into their information verification workflows, providing an additional layer of analysis to help identify biased or manipulative content before it reaches wider audiences. Training staff on how to interpret AI-generated insights and combine them with human expertise can enhance the accuracy and effectiveness of propaganda detection efforts.

The Future of Propaganda Detection with AI

Looking ahead, the role of AI in combating propaganda is only set to grow. Continuous advancements in machine learning and natural language processing technologies promise to enhance AI's ability to understand the intricacies of human language and the subtleties that differentiate genuine discourse from manipulation. Collaborative efforts between technologists, researchers, policymakers, and civil society will be crucial in steering these developments in a direction that maximizes their potential for good while mitigating risks.

The journey toward a world where information can be consumed with confidence, free from the taint of propaganda, is long and complex. Yet, with tools like ChatGPT at our disposal, we are better equipped than ever to navigate this landscape. The envisioned future is not one where AI replaces human judgment but rather augments it, enabling us to engage with the digital world with a higher degree of discernment and responsibility. The battle against propaganda is ongoing, but with AI as our ally, it's a battle we are increasingly capable of winning.
